Enhancing Fine-Tuning Based Backdoor Defense with Sharpness-Aware Minimization

Published in 2023 International Conference on Computer Vision, 2023

Recommended citation: Zhu, Mingli, et al. "Enhancing Fine-Tuning Based Backdoor Defense with Sharpness-Aware Minimization." 2023 International Conference on Computer Vision" https://arxiv.org/pdf/2304.11823

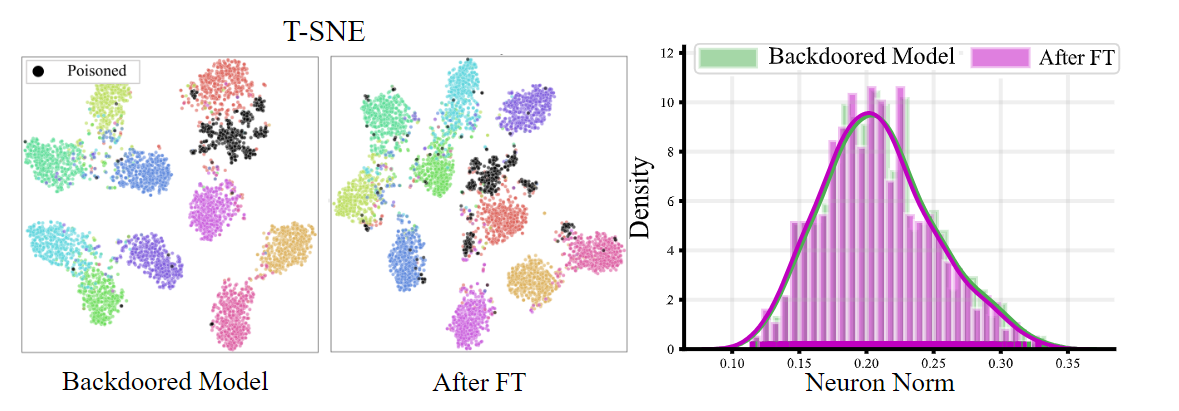

Backdoor defense, which aims to detect or mitigate the effect of malicious triggers introduced by attackers, is becoming increasingly critical for machine learning security and integrity. Fine-tuning based on benign data is a natural defense to erase the backdoor effect in a backdoored model. However, recent studies show that, given limited benign data, vanilla fine-tuning has poor defense performance. In this work, we provide a deep study of fine-tuning the backdoored model from the neuron perspective and find that backdoorrelated neurons fail to escape the local minimum in the fine-tuning process. Inspired by observing that the backdoorrelated neurons often have larger norms, we propose FTSAM, a novel backdoor defense paradigm that aims to shrink the norms of backdoor-related neurons by incorporating sharpness-aware minimization with fine-tuning. We demonstrate the effectiveness of our method on several benchmark datasets and network architectures, where it achieves state-of-the-art defense performance. Overall, our work provides a promising avenue for improving the robustness of machine learning models against backdoor attacks.